Valley Recap

✍🏼 AI Workloads Are Rewriting Hyperscale Strategy 🔭 Bay Area Startups Collectively Secured $2.64B

AI Workloads Are Rewriting Hyperscale Strategy

The past two years of AI infrastructure build-out have been driven by urgency. Hyperscalers rushed to secure GPUs, expand training clusters, and stand up new capacity wherever power and real estate were available. McKinsey’s latest analysis on AI workloads and hyperscaler strategy shows that this first phase is ending.

What comes next is more deliberate. AI is no longer being treated as a special project inside cloud organizations. It is becoming the workload that defines how infrastructure is planned, financed, and deployed.

This shift has clear implications for where data centers are built, how they are designed, and what constraints now matter most.

AI is now the main driver of data-center demand

For most of the last decade, data-center growth followed broad cloud adoption. SaaS, streaming, and enterprise IT migrations set the pace. That model is changing.

McKinsey estimates that AI workloads will push total data-center power demand close to three times today’s level by the end of the decade. Hyperscalers are expected to control most of that capacity, either through ownership or long-term leases that function like owned infrastructure.
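To put "close to three times today's level" in growth-rate terms, here is a minimal sketch of the compound annual growth rate that tripling implies. It assumes "today" means 2025 and "the end of the decade" means 2030, so about five years. Six years is shown too, in case "today" is read as 2024. These horizons and the resulting rates are illustrative assumptions and do not come from the McKinsey report.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Compound annual growth rate that turns 1x into `multiple`x over `years` years."""
    return multiple ** (1 / years) - 1


# Assumption: "today" is 2025 and "the end of the decade" is 2030 (five years).
# Six years is also shown, in case "today" is read as 2024.
for years in (5, 6):
    rate = implied_annual_growth(3.0, years)
    print(f"3x over {years} years = about {rate:.1%} growth per year")
```

Under these assumptions, tripling works out to roughly 20 to 25 percent compound growth in power demand every year.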

This concentrates both risk and opportunity. Capital planning, site selection, and supply-chain strategy are now being shaped by a narrower set of use cases with far higher technical requirements than traditional cloud workloads.

Inference overtakes training

Training built the first generation of AI infrastructure. Inference will account for most of the growth going forward.

McKinsey expects inference workloads to surpass training workloads before 2030. This reflects how AI is being deployed across products and services. Models are no longer used primarily in scheduled training cycles. Instead, they are embedded in search, support, recommendations, and internal tools that run continuously.

The infrastructure consequences are straightforward. Training favors large, centralized campuses with high power density and specialized cooling. Inference favors proximity. Latency, network access, and reliability matter more than maximum rack density.
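Why proximity matters for inference can be made concrete with propagation delay, the floor that physical distance puts under response time. The following is a minimal sketch. It assumes the common rule of thumb that light in optical fiber covers about 200,000 km/s, and it ignores routing detours, switching, queuing, and model processing time, all of which add latency in practice. The distances are illustrative and are not taken from the report.

```python
# Rule-of-thumb assumption: light in optical fiber travels about 200,000 km/s,
# roughly two-thirds of its speed in a vacuum.
FIBER_KM_PER_MS = 200.0  # kilometres covered per millisecond, one way


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from fiber propagation delay alone.

    Ignores routing detours, switching, queuing, and server processing,
    all of which add to real-world latency.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Illustrative distances between a user and the serving data center.
for label, km in [("same metro", 50), ("regional", 500), ("cross-country", 4000)]:
    print(f"{label:>13}: at least {min_round_trip_ms(km):.1f} ms round trip")
```

Every 100 km of fiber adds at least about 1 ms of round-trip delay before any computation happens. For a service that makes many calls per user interaction, that floor accumulates, which is one reason inference capacity gets pulled toward metro sites.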

Hyperscalers are responding by building two different physical systems at the same time: large remote campuses optimized for training, and smaller, distributed sites designed for inference. The cloud may still look centralized from a software perspective, but physically it is becoming more distributed again.

Reliability becomes a business issue

As AI moves into revenue-generating workflows, the cost of downtime changes.

Outages that once affected internal tools or background services now affect customer-facing systems. McKinsey highlights a growing shift toward higher availability standards for inference environments, including full redundancy across power, cooling, and network paths. When AI systems handle customer interactions, transactions, or operations, infrastructure resilience becomes a commercial requirement, not just a technical one.
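The value of "full redundancy across power, cooling, and network paths" shows up in simple availability arithmetic. In the sketch below, subsystems that must all be up multiply their availabilities ("series"). Duplicated paths fail only if every copy fails ("parallel"). It assumes a hypothetical 99.9% availability per path and independent failures. Both are simplifying assumptions for illustration, and neither number comes from the report.

```python
import math

HOURS_PER_YEAR = 365 * 24


def parallel(availability: float, copies: int) -> float:
    """Availability of redundant copies: the service stays up if any copy works.

    Assumes the copies fail independently of each other.
    """
    return 1 - (1 - availability) ** copies


def series(*availabilities: float) -> float:
    """Availability of a chain where every stage (power, cooling, network) must be up."""
    return math.prod(availabilities)


def downtime_hours(availability: float) -> float:
    """Expected hours per year that the service is unavailable."""
    return (1 - availability) * HOURS_PER_YEAR


# Hypothetical availability of a single path in each subsystem.
PATH = 0.999

no_redundancy = series(PATH, PATH, PATH)
full_redundancy = series(parallel(PATH, 2), parallel(PATH, 2), parallel(PATH, 2))

print(f"no redundancy:   {no_redundancy:.6f} (~{downtime_hours(no_redundancy):.2f} h/yr down)")
print(f"full redundancy: {full_redundancy:.6f} (~{downtime_hours(full_redundancy):.2f} h/yr down)")
```

Because every subsystem must be up, the non-redundant chain is less available than any single path. Under these assumptions, duplicating each path cuts expected downtime from just over a day per year to under two minutes. Real failures are often correlated, so actual gains are smaller.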

Two infrastructure networks, not one

A central theme in the report is the emergence of a dual approach to hyperscale infrastructure. On one side are very large training campuses built in regions with access to power and land. On the other are inference-focused facilities closer to users, data, and network hubs.

This challenges the assumption that hyperscale always means centralization. The next phase of hyperscale is split by function: centralized where scale matters, distributed where response time matters. For colocation providers, interconnection platforms, and metro data-center operators, this is not a marginal change. It expands the role of regional infrastructure rather than reducing it.

Power becomes the binding constraint

If there is one factor that now shapes hyperscaler strategy more than any other, it is access to electricity. McKinsey is explicit that grid congestion, long interconnection queues, and permitting delays are now among the biggest limits on AI expansion. In established markets, these constraints are often harder to solve than real-estate or capital challenges.

Hyperscalers are responding in two ways. First, by moving into secondary markets where power can be delivered faster. Second, by becoming more involved upstream in energy strategy, including long-term power agreements, on-site generation, and storage.

Energy is no longer treated as a utility input. It is being treated as a strategic resource that shapes competitive positioning.

Faster builds, shorter planning cycles

To meet demand, hyperscalers are changing how they deploy infrastructure.

McKinsey points to a shift toward leasing, modular construction, prefabrication, and phased campus development. The goal is speed, not architectural perfection. Facilities are increasingly designed to be upgraded over time rather than built once to a final state.

Existing data centers are also being pushed harder. Liquid cooling retrofits, higher rack densities, and upgraded power systems are extending the life of sites that were never designed for AI workloads.

Infrastructure planning is starting to resemble product development: launch quickly, improve continuously.

Hyperscalers move closer to utilities

One of the longer-term implications in the report is that hyperscalers are taking on a role that looks increasingly like that of infrastructure providers rather than just cloud platforms.

They influence where power is generated, how grids are expanded, and how large-scale infrastructure is financed. In some regions, hyperscalers now act as anchor customers around which entire energy and data-center ecosystems are built.

This does not make them utilities in a regulatory sense. But it does place them in a position of structural influence that extends well beyond software and services.

What this means for the Valley

AI is no longer an overlay on cloud infrastructure. It is reshaping the foundation. Inference is becoming the dominant workload. Reliability is becoming a business requirement. Energy access is becoming the main constraint. Hyperscalers are redesigning how they build, where they build, and what they control.

The next phase of AI will not be decided only by model performance or chip roadmaps. It will be decided by who can secure power, deploy infrastructure quickly, and operate it with the reliability that AI-driven services now demand.

That is the real shift behind the charts.

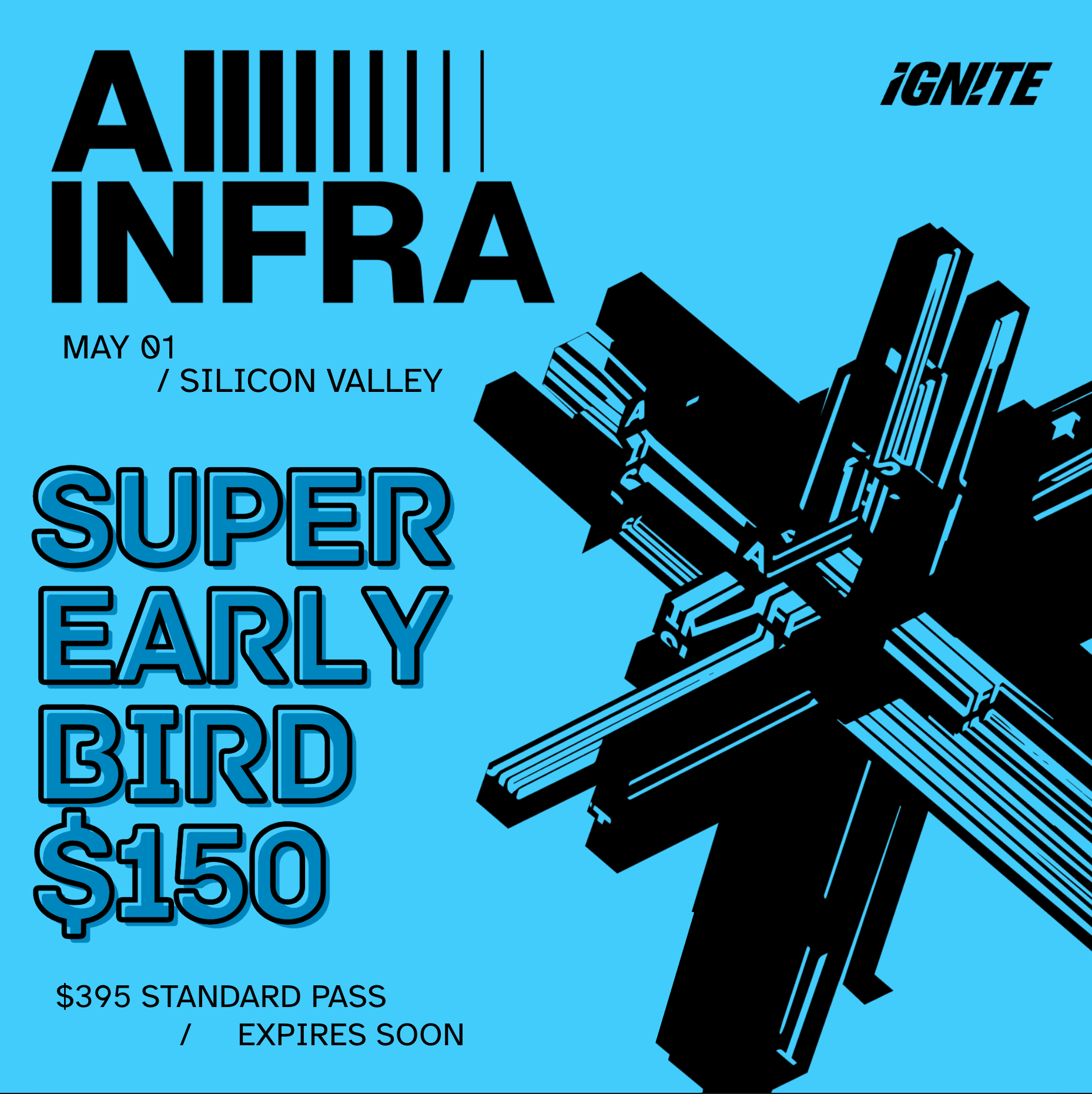

NRF Networking Party // New York City // Jan. 11

Upcoming Events

Bay Area Startups Collectively Secured $2.64B+ in January Week 2

This week's deals brought in $2.64B for Silicon Valley startups, taking the month's total to $24.71B. That's a new record for January funding, more than $5B above January 2022. Eight of this week's deals were megadeals that made up 74% of the week's total, including a $500M Series B to Etched, a $400M Series D to ClickHouse (just a few months after its Series C) and a $252M seed round to Merge Labs.
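As a quick sanity check on the megadeal share, the sketch below applies the reported 74% to the week's $2.64B. It then subtracts the three megadeals named in this recap, which leaves the combined size of the other five. The inputs are the rounded figures reported here, and the week's total is stated as "$2.64B+", so the results are approximate.

```python
# Figures as reported in this recap, in billions of US dollars (rounded).
week_total = 2.64
megadeal_share = 0.74
named_megadeals = {"Etched": 0.500, "ClickHouse": 0.400, "Merge Labs": 0.252}

megadeal_total = week_total * megadeal_share
named_total = sum(named_megadeals.values())
other_five = megadeal_total - named_total  # the remaining five of the eight megadeals

print(f"megadeals: ~${megadeal_total:.2f}B of ${week_total:.2f}B")
print(f"three named: ${named_total:.3f}B; other five combined: ~${other_five:.2f}B")
```

Under these rounded inputs, the eight megadeals account for roughly $1.95B. The three named rounds make up about $1.15B of that, which leaves roughly $0.8B for the other five megadeals combined.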

Founder Alert: Part 10 of Legal Foundations, from Seed to Sale, covering legal essentials for startups, is next Wednesday, Jan. 21 at noon PST with Roger Royse and Bob Karr. Part 10 covers mergers and acquisitions for startups, including deal process, structure, negotiation, compliance, tax and more. You can register here.

Follow us on LinkedIn to stay on top of SV funding intelligence and key players in the startup ecosystem.

Early Stage:

Merge Labs closed a $252M Seed, developing new approaches to brain-computer interfaces that interact with the brain at high bandwidth and integrate with advanced AI.

Higgsfield AI closed an $80M Series A, an AI-native generative video platform built for creators, brands, agencies, and marketing teams producing high-fidelity videos at scale.

depthfirst closed a $40M Series A, an applied AI lab developing novel security solutions for businesses facing modern, AI-era threats to their systems.

Transition Metal Solutions closed a $6M Seed, developing custom chemical additives that enhance wild microbial communities for metal extraction.

ModeInspect closed a $3.4M Seed, empowering large design teams with AI across their core workflows, from prototyping and design QA to designing full-fledged features.

Growth Stage:

ClickHouse closed a $400M Series D, a fast, open-source columnar database management system built for real-time data processing and analytics at scale.

Alpaca closed a $150M Series D, a self-clearing broker-dealer and a global leader in brokerage infrastructure APIs providing access to stocks, ETFs, options, and fixed income.

Deepgram closed a $130M Series C, the real-time API platform underpinning the Voice AI economy.

Harmonic Math closed a $120M Series C, developing Mathematical Superintelligence (MSI), a mathematics-rooted approach that the company says guarantees accuracy and eliminates hallucinations.

WitnessAI closed a $58M Series B, the unified AI security and governance platform enterprises trust to govern and protect all AI activity.

HirePilot is an AI-powered recruiting CRM and ATS built for recruiters, consultants, and hiring partners who need a better way to manage sourcing, outreach, and hiring pipelines. The platform brings structure and automation to every stage of the recruiting and consulting workflow, from prospecting and engagement to pipeline management and placement.

What HirePilot Delivers

- AI-assisted sourcing and outreach to identify, contact, and engage candidates and prospects
- Recruiting CRM + ATS to manage leads, candidates, roles, and client pipelines in one system
- Automated workflows and follow-ups that reduce manual work and keep deals and searches moving
- Collaborative tools for teams and consultants to track conversations, stages, and decisions
- Custom dashboards and analytics to monitor activity, performance, and pipeline health in real time

Why It Matters

Recruiting and consulting workflows are often spread across inboxes, spreadsheets, LinkedIn, and disconnected tools. HirePilot replaces that fragmentation with a centralized, AI-driven system that helps recruiters and consultants operate faster, stay organized, and scale their impact without added complexity.

Who It Serves

Recruiters, staffing agencies, independent consultants, and in-house hiring teams who want a smarter, more efficient way to manage sourcing, outreach, and hiring pipelines, all from a single platform.

Your Feedback Matters!

Your feedback is crucial in helping us refine our content and maintain the newsletter's value for you and your fellow readers. We welcome your suggestions on how we can improve our offering. [email protected]

Logan Lemery

Head of Content // Team Ignite

Hiring in 8 countries shouldn't require 8 different processes

This guide from Deel breaks down how to build one global hiring system. You’ll learn about assessment frameworks that scale, how to do headcount planning across regions, and even intake processes that work everywhere. As HR pros know, hiring in one country is hard enough. So let this free global hiring guide give you the tools you need to avoid global hiring headaches.