- Valley Recap

⚔️The AI Land War is brewing💰Bay Area Startups Collectively Secured $19B

AI infrastructure is running into a hard physical limit that software, capital, and silicon cannot overcome: large‑scale, reliable electricity. In 2026, advantage in AI is increasingly defined in megawatts, not model parameters.

From land wars to power bottlenecks

For a decade, developers competed for prime sites in hubs like Northern Virginia. Today, those markets are constrained not by acreage but by power availability. Dominion Energy expects electricity demand in Virginia, driven largely by data centers, to nearly double from 17 GW in 2023 to 33 GW by 2048. A study by JLARC, Virginia's Joint Legislative Audit and Review Commission, warns that grid and transmission upgrades are already struggling to keep pace with planned data center capacity.

Interconnection delays have become the gating factor for scale. Across several U.S. regions, 100+ MW projects face four‑ to seven‑year timelines for the necessary transmission, substations, and approvals. AI hardware cycles, by contrast, turn over every 12–24 months. GPUs depreciate quickly and shells can be built in a year, but utility infrastructure moves at public‑sector speed, creating an unprecedented stranded‑capacity risk: powered‑off compute waiting on electrons.

“Dirt to Token” as the new stack

AI compute now operates as an industrial system built on a physical stack: land, generation, storage, transmission, and only then compute. Loudoun County's “Data Center Alley” is the leading indicator: Dominion began constraining power allocations there in 2021, and county strategy documents explicitly flag power as the central risk to future growth.

Developers are responding with vertically integrated “dirt to token” strategies that secure:

Land adjacent to high‑capacity transmission and fuel infrastructure.

Long‑term power, including on‑site or directly connected generation.

Storage and microgrids to stabilize 24/7 AI workloads.

In this model, power is no longer a pass‑through utility. It is the primary input that sets the marginal cost of intelligence.

Nuclear as baseload for AI

The duty cycle of AI workloads is pushing hyperscalers toward nuclear as a foundational energy source. Global data center electricity use is projected to grow from roughly 460 TWh in 2024 to more than 1,000 TWh by 2030, and to around 1,300 TWh by 2035, nearly tripling in about a decade. Training clusters and high‑volume inference fleets require round‑the‑clock, firm power that solar and wind alone cannot guarantee.
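As a rough worked check, using only the two endpoints quoted above (about 460 TWh in 2024 and about 1,300 TWh in 2035, i.e. 11 years apart), the implied compound annual growth rate can be sketched as follows. This is simple arithmetic on the cited projection, not an independent forecast:

```latex
\text{CAGR}_{2024\to2035}
  = \left(\frac{1300\ \text{TWh}}{460\ \text{TWh}}\right)^{1/11} - 1
  \approx 2.83^{1/11} - 1
  \approx 0.099 \quad (\text{about } 10\%\ \text{per year})

\text{CAGR}_{2024\to2030}
  = \left(\frac{1000\ \text{TWh}}{460\ \text{TWh}}\right)^{1/6} - 1
  \approx 2.17^{1/6} - 1
  \approx 0.138 \quad (\text{about } 14\%\ \text{per year})
```

Read together, the two figures suggest the projection front‑loads growth: roughly 14% a year through 2030, slowing to around 5% a year (1,000 to 1,300 TWh over five years) afterward.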

In response, large technology companies are signing nuclear‑linked deals at gigawatt scale. AWS entered a 10‑year agreement with Talen Energy for a 960 MW campus adjacent to the Susquehanna nuclear plant in Pennsylvania. Microsoft has structured contracts with Constellation Energy tied to the restart and continued operation of nuclear assets to support 24/7 cloud workloads. Industry estimates suggest hyperscalers have now contracted more than 10 GW of nuclear capacity, including early‑stage small modular reactor pathways.

Long‑dated contracts of this kind, often running 15–20 years, effectively align nuclear baseload with the depreciation cycle of AI infrastructure, turning fission into the anchor of the AI load curve.

Behind‑the‑meter power and microgrids

Nuclear is a long‑cycle solution. To move faster, developers are increasingly building behind‑the‑meter generation for campuses of 500 MW and up. Roughly one‑third of proposed U.S. data center capacity, around 48 GW, now plans to use on‑site or directly connected plants to partially “skip the grid,” up from under 2 GW in late 2024.

New campuses are being designed around dedicated gas‑fired plants approaching 1 GW, large‑scale batteries, and hybrid microgrids that blend gas, solar, wind, and storage. This collapses the distinction between data center developer and independent power producer: capital plans now include substations, grid studies, fuel logistics, and multi‑gigawatt generation alongside racks and cooling.

Power as competitive moat

Site selection criteria are shifting from “fiber and tax incentives” to energy system fundamentals. In Virginia alone, meeting projected data‑center‑driven demand could require 57–60 GW of new capacity by 2040, roughly three times Dominion’s 2023 generation fleet. Leading operators now prioritize:

Proximity to robust transmission and substations.

Access to gas pipelines or nuclear capacity for firm supply.

Permissive regimes for on‑site generation and innovative tariffs.

For large‑scale AI, the cost curve is migrating from silicon to electrons. Developers that control their own generation timelines and pricing gain a structural advantage over peers locked into congested grids and utility queues. The land war persists, but in 2026 energy is both the bottleneck and the cost curve, and a “dirt to token” energy strategy has become the enduring competitive moat.

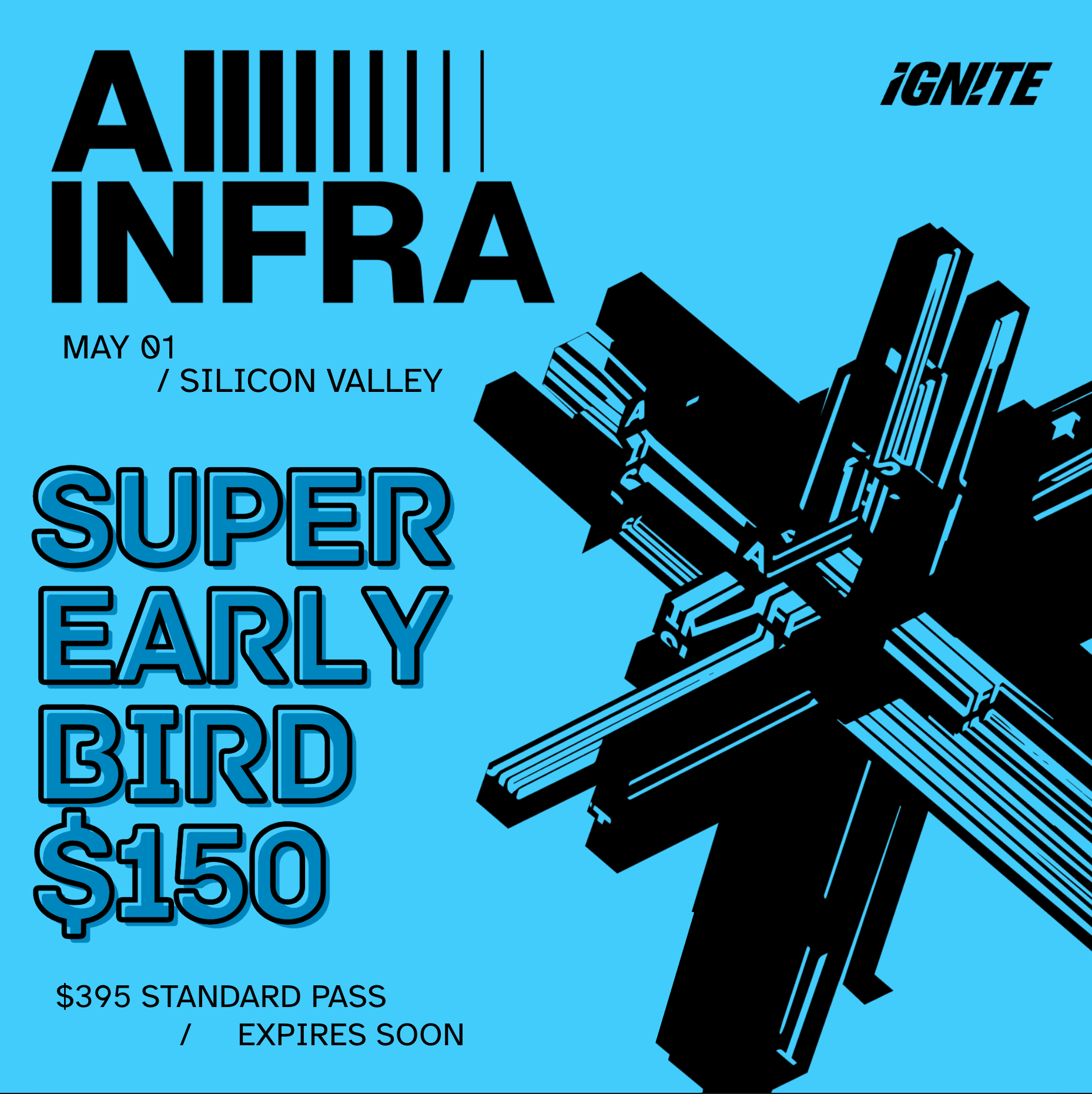


Bay Area Startups Collectively Secured $19B in Feb Week 1

February opened with a blazing start in funding activity: deals totaled $19B, already a new record for the month. Ten megadeals accounted for 95% of the total, led by Waymo's $16B Series D and followed by Cerebras Systems' $1B Series H, Bedrock Robotics' $270M Series B, and seven more.

Exits: The eight acquisitions this week were all for undisclosed amounts, while two IPOs raised a total of $579M. A third IPO, Liftoff Mobile, was postponed due to market volatility. Of the two completed IPOs, Once Upon a Farm opened and rose slightly, while Eikon Therapeutics opened below its offer price and fell further. Eikon's was a “down round” IPO, valuing the company at less than a third of the $3.5B valuation set in its Series D in early 2025.

Follow us on LinkedIn to stay on top of SV funding intelligence and key players in the startup ecosystem.

Early Stage:

Fundamental Technologies closed a $225M Series A, developing Large Tabular Models purpose-built for the structured records that contain trillions of dollars in business value.

Resolve AI closed a $125M Series A, building AI for running and operating software in production.

Gruve closed a $50M Series A, helping enterprises, neoclouds, and AI startups build and operate infrastructure-to-agent AI solutions.

Powerline inc. closed a $7M Seed, developing AI systems for monitoring and operating energy assets.

Airrived closed a $6.1M Seed for its Agentic OS, which aims to redefine how enterprises run cybersecurity, IT, and business operations in the agentic era.

Growth Stage:

Cerebras Systems closed a $1B Series H, building a new class of AI supercomputer.

Bedrock Robotics closed a $270M Series B, building the future of autonomous construction.

Goodfire closed a $150M Series B, building the next generation of safe and powerful AI, not by scaling alone but by understanding the intelligence being built.

Lunar Energy closed a $102M Series D, developing intelligent software and advanced hardware that electrify homes and connect communities into clean, resilient virtual power plants.

Midi Health closed a $100M Series D, providing accessible, insurance-covered services in all 50 states, designed by world-class medical experts.

AMD

AMD is expanding its footprint in AI and high-performance computing with a broad portfolio of CPUs, accelerators, and system platforms that support workloads at every scale, from cloud and data centers to edge and client devices.

Recent announcements introduced new rack-scale platforms built on EPYC server CPUs and Instinct GPU accelerators, along with next-generation tools aimed at enterprise and hyperscale AI deployments. Management has also shared plans for continued investment across CPUs, GPUs, NPUs, and networking infrastructure to support what it calls the next phase of global compute demand. AMD’s data center business delivered strong growth, driven by broader adoption of EPYC and Instinct products as AI workloads scale, and executives have projected robust future increases in data center revenue as new silicon and systems reach production.

Why It Matters

Companies building AI infrastructure need options beyond traditional architectures. AMD’s strategy positions it as a diversified compute provider across servers, accelerators, and client-side AI hardware while maintaining momentum in data center sales and partnerships that support large-scale deployments.

Who It Serves

Cloud providers, enterprise data centers, AI developers, OEM partners, and organizations seeking a range of performance-optimized compute solutions.

Learn more: amd.com

Your Feedback Matters!

Your feedback is crucial in helping us refine our content and maintain the newsletter's value for you and your fellow readers. We welcome your suggestions on how we can improve our offering. [email protected]