🔥Heat Is Killing AI 💰Bay Area Startups Collectively Secured $52.5B MTD

AI infrastructure has hit a hard limit no software can evade: heat. Air cooling served the 5 to 10 kW rack era well enough, but that era is over. Rack power demands have exploded, making thermal design a fundamental go/no-go factor for AI clusters in 2026.

The Density Explosion

Cloud workloads settled into 5 to 10 kW racks, with 15 to 20 kW marking the high end. AI workloads operate at an entirely different scale. Production racks now average 40 to 50 kW, while leading GPU clusters routinely reach 100 to 120 kW per rack or more. Roadmaps project even higher peaks.

A single training pod generates more heat across a few square meters than entire legacy enterprise halls once managed. Traditional hot aisle/cold aisle systems and CRAH units simply lack the capacity to shift that volume of energy. Operators can apply workarounds like rear-door heat exchangers or aggressive fan curves, but those measures cap out around 30 to 40 kW per rack. Beyond that threshold, diminishing returns turn into outright failure.
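To see why air runs out of headroom, here is a rough back-of-the-envelope sketch using the standard sensible-heat balance (power = density × volumetric flow × specific heat × temperature rise). The air properties are textbook approximations, and the 12 K inlet-to-outlet temperature rise is an assumed value chosen for illustration, not a figure from any specific facility.

```python
# Sensible-heat balance: P = rho * V_dot * cp * dT  ->  V_dot = P / (rho * cp * dT)
AIR_DENSITY = 1.2      # kg/m^3, approximate value near sea level at ~20 C
AIR_CP = 1005.0        # J/(kg*K), approximate specific heat of air
M3S_TO_CFM = 2118.88   # cubic feet per minute in 1 m^3/s


def airflow_m3s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Airflow (m^3/s) needed to carry rack_kw of heat.

    delta_t_k is the ASSUMED air temperature rise across the rack.
    """
    return rack_kw * 1000.0 / (AIR_DENSITY * AIR_CP * delta_t_k)


if __name__ == "__main__":
    for kw in (10, 40, 120):
        flow = airflow_m3s(kw)
        print(f"{kw:>4} kW rack: {flow:5.2f} m^3/s (~{flow * M3S_TO_CFM:,.0f} CFM)")
```

Under these assumptions the required airflow scales linearly with rack power: about 0.7 m³/s for a 10 kW rack versus more than 8 m³/s for a 120 kW rack, all of it pushed through the same rack face.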

The Retrofit Crisis

The global data center inventory reflects yesterday's needs. While hyperscalers reserve high-density zones in newer facilities, most existing sites adhere to 5 to 15 kW design limits. Cooling tonnage represents only one barrier among several structural realities.

Floor loading is the first major obstacle. Modern racks, containment structures, and liquid distribution gear can together exceed 3,000 pounds per rack. Legacy raised floors are rated for 150 pounds per square foot and buckle under sustained high-density deployments.
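The floor-loading gap can be sketched with simple arithmetic. The 3,000 pound weight and the 150 pounds per square foot rating come from the text above; the 2 ft × 4 ft rack footprint is an assumption for illustration, and real floor ratings distinguish uniform, concentrated, and rolling loads, so treat this as an order-of-magnitude check rather than a structural calculation.

```python
RACK_WEIGHT_LB = 3000.0     # from the text: rack plus containment and liquid gear
FLOOR_RATING_PSF = 150.0    # from the text: legacy raised-floor rating
FOOTPRINT_FT = (2.0, 4.0)   # ASSUMPTION: roughly 24 in x 48 in rack footprint


def footprint_load_psf(weight_lb: float, width_ft: float, depth_ft: float) -> float:
    """Average load over the rack footprint, in pounds per square foot."""
    return weight_lb / (width_ft * depth_ft)


load = footprint_load_psf(RACK_WEIGHT_LB, *FOOTPRINT_FT)
# Floor area the same weight would need to be spread over to stay within the rating
area_needed_sqft = RACK_WEIGHT_LB / FLOOR_RATING_PSF

if __name__ == "__main__":
    print(f"Load over footprint: {load:.0f} psf "
          f"({load / FLOOR_RATING_PSF:.1f}x the {FLOOR_RATING_PSF:.0f} psf rating)")
    print(f"Area needed at rated load: {area_needed_sqft:.0f} sq ft")
```

With the assumed footprint, the rack loads the floor at 375 psf, 2.5 times the rating, and would need about 20 square feet of floor per rack to stay within it.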

Piping infrastructure compounds the issue. Liquid systems demand dedicated CDUs, manifolds, and runs to chillers or dry coolers, none of which exist in facilities plumbed for air-only operations. Retrofitting live environments triggers extended downtime, skyrocketing costs, and partial compromises at best.

Power delivery and redundancy complete the picture. Even perfect cooling proves useless against 15 to 20 kW feeds. Operators can isolate small high-density pods within older shells, but wholesale conversion from 2012-era enterprise data centers to 2026 AI campuses requires full demolition and rebuild. The internet's physical backbone must evolve or obsolesce.

Liquid Cooling as Operational Mandate

Liquid cooling was once confined to HPC labs and experimental clusters, where it promised superior thermals but carried a reputation for complexity. That perception has evaporated.

Direct-to-chip systems deliver coolant precisely to heat sources, extracting tens of kilowatts per server with far greater efficiency than air. Immersion setups submerge entire systems in dielectric fluid, enabling extreme densities through simplified mechanics and reduced airflow dependency. Liquid's heat capacity supports hotter components in compact footprints, while slashing fan power and stabilizing performance.
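The heat-capacity advantage can be made concrete with a small sketch. It assumes plain water as the coolant (production loops often use water-glycol mixes with somewhat lower heat capacity), textbook fluid properties, and an assumed 10 K coolant temperature rise; the function name and the 100 kW example load are illustrative.

```python
# Approximate fluid properties at room temperature
WATER_DENSITY = 997.0   # kg/m^3
WATER_CP = 4180.0       # J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)

# Heat carried per cubic meter of fluid per kelvin of temperature rise
water_vol_heat_capacity = WATER_DENSITY * WATER_CP
air_vol_heat_capacity = AIR_DENSITY * AIR_CP
ratio = water_vol_heat_capacity / air_vol_heat_capacity


def coolant_lpm(rack_kw: float, delta_t_k: float = 10.0) -> float:
    """Water flow (liters per minute) needed to carry rack_kw of heat.

    delta_t_k is the ASSUMED coolant temperature rise across the rack.
    """
    flow_m3s = rack_kw * 1000.0 / (water_vol_heat_capacity * delta_t_k)
    return flow_m3s * 1000.0 * 60.0  # m^3/s -> L/min


if __name__ == "__main__":
    print(f"Water carries ~{ratio:,.0f}x more heat per unit volume than air")
    print(f"100 kW rack: ~{coolant_lpm(100):.0f} L/min of water at a 10 K rise")
```

Under these assumptions, water moves roughly 3,500 times more heat per unit volume than air, so a 100 kW rack needs on the order of 144 L/min of water rather than several cubic meters of air per second.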

By 2026, liquid cooling defines the baseline for dense AI deployments. Roughly 80 percent of new facilities integrate it from initial design rather than as an afterthought. Air persists for lighter ancillary loads, but GPU-heavy zones demand liquid capacity upfront.

The Revised Facility Stack

This shift ripples through every layer of campus design. Shells now accommodate elevated floor loads, streamlined airflow paths, and embedded liquid corridors from the outset. Mechanical plants scale for elevated return temperatures, with loops optimized for CDU feeds rather than broad air distribution.

Server and rack architectures evolve in tandem, as OEMs standardize liquid-ready configurations for flagship AI hardware. Business-park air-cooled facilities fade from relevance. Market value concentrates in purpose-built campuses where thermal, power, and compute systems integrate as a unified whole.

Thermal Implications for Operators

Operators targeting meaningful AI scale face stark choices. New campuses must treat liquid cooling as foundational infrastructure. Partial retrofits consign most existing square footage to legacy status. Opting out forfeits access to the era's most lucrative workloads.

Liquid cooling stands as thermodynamics' verdict on the AI age. The industry confronts a thermal wall that demands passage on physics' terms alone.

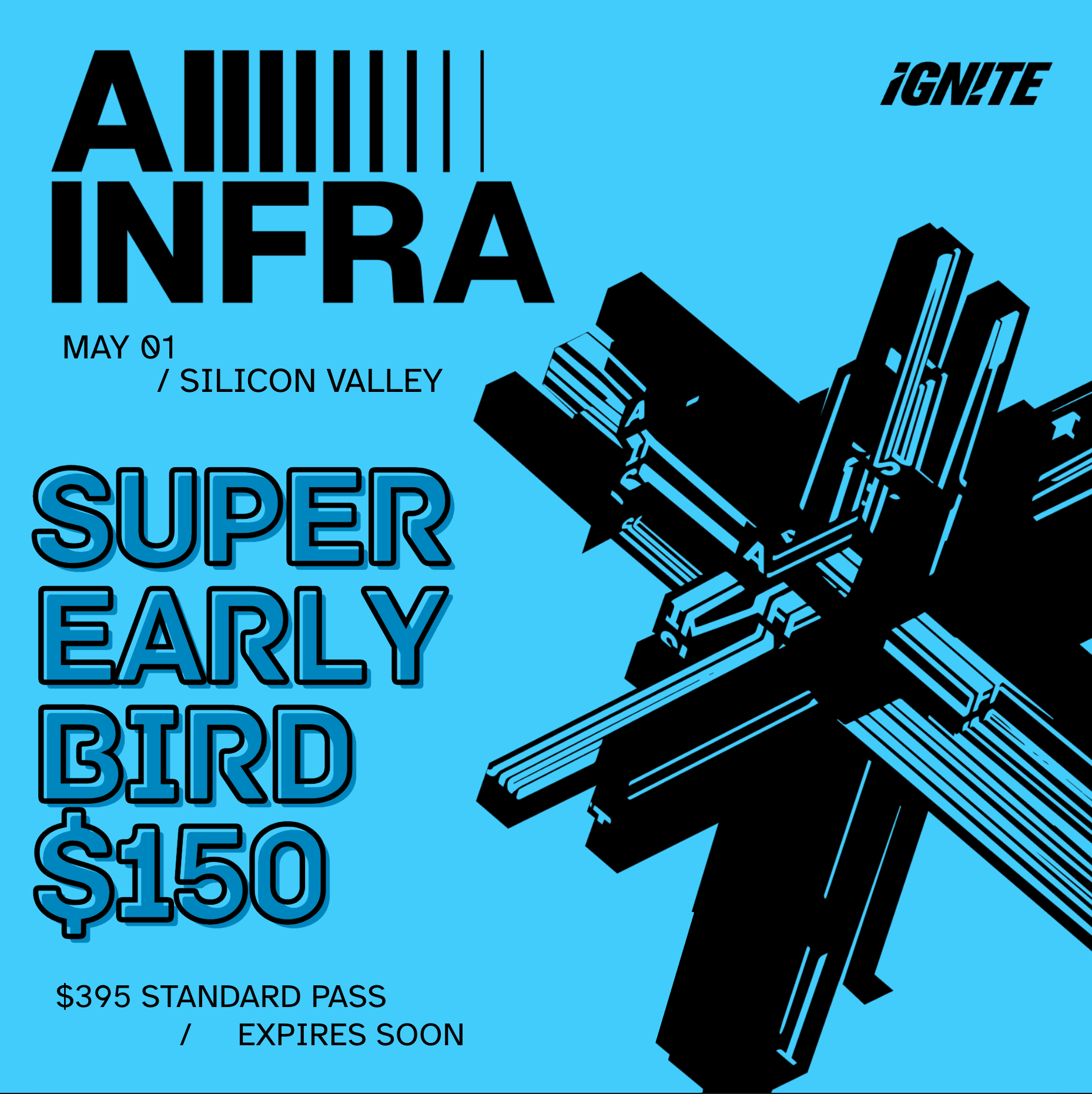

Bay Area Startups Collectively Secured $52.5B MTD In February

February funding activity continued to set new records, closing the week at $1.02B and taking the month to $52.5B. That takes our 2026 Q1-to-date figure over $82B, already more than the full-quarter total for Q1 2025. And the level of funding activity, particularly in megadeals, shows no sign of abating. OpenAI is said to be nearing the first close on a $100B round (which would set a new record), with Amazon, SoftBank and NVIDIA in talks to invest up to $50B, $30B and $20B respectively. Microsoft is also in the mix, along with VC firms and sovereign wealth funds, making it possible the round will exceed OpenAI's target.

For startups raising capital: Stay on top of who's raising, who's closing and who's investing with the Pulse of the Valley weekday newsletter. Founders get the newsletter, database and alerts for just $7/month ($50 value). Check it out, sign up here.

Follow us on LinkedIn to stay on top of SV funding intelligence and key players in the startup ecosystem.

Early Stage:

Cogent Security closed a $42M Series A, building autonomous agents that close the execution gap in cybersecurity.

Kana closed a $15M Seed, an agentic marketing platform powering brands to win in the agent economy.

ZaiNar closed a $10M Series A, building a foundation layer for Physical AI that transforms 5G and WiFi infrastructure into a comprehensive spatial sensing system.

Skygen.AI closed a $7M Seed, specializing in the creation of autonomous software agents.

Sift Biosciences closed a $3.7M Pre-Seed, developing a new class of T-cell modulators to treat cancers and autoimmune diseases by leveraging AI.

Growth Stage:

World Labs closed a $1B Series C, building frontier world models that can perceive, generate, reason, and interact with the 3D world.

Heron Power closed a $140M Series B, enabling renewable energy, storage, and datacenter developers to connect directly to medium voltage transmission without using a transformer.

Render closed a $100M Series C, a platform to build and scale applications and websites with a global CDN, DDoS protection, preview environments, private networking, and Git auto deploys.

Braintrust AI closed an $80M Series B, the AI observability platform helping teams measure, evaluate, and improve AI in production.

ProSomnus Sleep Technologies closed a $38M round (series unspecified), maker of the leading non-CPAP OSA therapy® for the treatment of Obstructive Sleep Apnea.

Vultr is a global cloud infrastructure provider focused on simplicity, performance, and predictable pricing. The platform delivers fast compute, storage, and networking services designed to support startups, developers, and enterprises with flexible cloud resources across the world.

What Vultr Delivers

• Bare metal, virtual machines, and dedicated cloud compute options

• High performance block storage and object storage solutions

• Global data center footprint that enables low-latency deployment

• Consistent pricing without hidden fees for predictable infrastructure cost

Why It Matters

As applications scale and workloads become more demanding, teams need cloud infrastructure that is reliable, performant, and easy to deploy. Vultr helps companies of all sizes move faster with cloud instances that can be provisioned in minutes and scaled with demand.

Who It Serves

Developers, startups, SaaS businesses, and enterprises looking for foundational cloud infrastructure they can trust for production workloads, edge use cases, and global distribution.

Vultr brings simple, powerful cloud infrastructure to teams building the next generation of applications.

See more at https://www.vultr.com/

Your Feedback Matters!

Your feedback is crucial in helping us refine our content and maintain the newsletter's value for you and your fellow readers. We welcome your suggestions on how we can improve our offering.

Logan Lemery

Head of Content // Team Ignite

Ship the message as fast as you think

Founders spend too much time drafting the same kinds of messages. Wispr Flow turns spoken thinking into final-draft writing so you can record investor updates, product briefs, and run-of-the-mill status notes by voice. Use saved snippets for recurring intros, insert calendar links by voice, and keep comms consistent across the team. It preserves your tone, fixes punctuation, and formats lists so you send confident messages fast. Works on Mac, Windows, and iPhone. Try Wispr Flow for founders.