Why proximity, hierarchy, and caching define the next era of AI infrastructure 🔭 Bay Area Startups Collectively Secured $25B+ 💰

Serving Intelligence: From Training Clusters to Inference Grids

Why proximity, hierarchy, and caching define the next era of AI infrastructure

By Jay Chiang

Nov 16, 2025

Jay Chiang, Series: AI Infrastructure Research №3 (2025)

Image created with AI — Inference as a Grid

The Architecture Was Built for Training

AI infrastructure today reflects the training era’s requirements. Massive centralized clusters, synchronized execution across thousands of GPUs, and relentless optimization for utilization and throughput. This architecture succeeded brilliantly. Training frontier models (newest, largest, most advanced models) demands coordinating 10,000+ GPUs in lockstep, maintaining high-bandwidth GPU-to-GPU communication, and maximizing every hour of expensive compute time. So we build where power is cheap. We keep GPUs busy. And we batch everything.

Inference operates under fundamentally different constraints.

Millions of users arrive asynchronously, each demanding sub-200ms responses. Data sovereignty regulations often restrict cross-border queries. Latency requirements make geographic positioning non-negotiable. Responsiveness trumps utilization. A GPU sitting idle but ready for burst traffic serves users better than a fully utilized GPU queuing requests.

This isn’t just a workload difference. It’s the same distinction the electrical industry faced a century ago.

Power generation happens in massive centralized plants optimized for efficiency and scale. But power distribution requires a hierarchical network of transmission lines, regional substations, and local transformers, all positioned near demand, not near cheap fuel.

Our previous article showed that training data centers cannot simply be repurposed for inference deployment. This article explains why that architectural mismatch is fundamental — and what infrastructure profile actually succeeds.

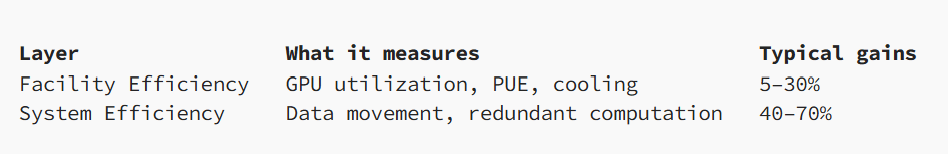

Common data center metrics coined in the training era focus on visible quantities such as GPU utilization, PUE (Power Usage Effectiveness), and tokens per second. According to Uptime Institute’s Global Data Center Survey 2024, median hyperscale PUE has plateaued between 1.15 and 1.25, meaning even aggressive facility optimization yields only 5–20% additional efficiency headroom. The bigger savings come from reducing data movement and redundant computation, a layer almost no one measures. A few milliseconds of network latency may feel irrelevant in a single request. Compounded across millions of queries, however, unnecessary network hops and remote data accesses multiply that waste thousands of times a day.
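Why facility tuning has so little headroom follows from the definition of PUE: total facility energy divided by IT equipment energy. Holding the IT load fixed, the non-IT overhead is (PUE - 1) / PUE of the total, which caps what any facility-side optimization can save. A minimal sketch of that ceiling, using the survey range quoted above:

```python
def max_overhead_savings(pue: float) -> float:
    """Largest share of total facility energy that facility tuning could remove.

    PUE = total facility energy / IT equipment energy. Holding the IT load fixed,
    the non-IT overhead (cooling, power conversion, lighting) is (PUE - 1) / PUE
    of the total, which is the ceiling on facility-side savings.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return (pue - 1.0) / pue


for pue in (1.15, 1.25):
    print(f"PUE {pue}: overhead is {max_overhead_savings(pue):.1%} of total energy")
```

Even a perfect PUE of 1.0, which no real facility reaches, would remove only about 13% (at 1.15) to 20% (at 1.25) of total energy; realistic improvements capture a fraction of that.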

Take a modest enterprise workload of one million inference requests per day. The model computation might consume roughly 3,000–6,000 kWh, but the data plane behind each request (embeddings, KV-cache reads, retrieval calls, and cross-region hops) often burns just as much energy. Move that workload from a distant cloud region to a local edge site and the physics change immediately. Round-trip distance drops, retries disappear, cache hit rates rise, and cross-region traffic collapses. In practice, this cuts data movement by about half and trims total energy by 30–50%. Add avoided egress fees, easily $150–$650 per month at this scale, and the economics compound. Proximity doesn’t just reduce latency. It removes the single biggest hidden cost of inference.
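The one-million-request example can be made explicit with a back-of-envelope model. The split below is an assumption read from the text: 4,500 kWh/day of compute (the midpoint of 3,000–6,000) and a data plane that burns "just as much". The `compute_cut` parameter is a hypothetical knob for work avoided through higher cache hit rates; it is not a figure from the source.

```python
def energy_savings_fraction(compute_kwh: float, data_plane_kwh: float,
                            data_plane_cut: float, compute_cut: float = 0.0) -> float:
    """Share of daily energy saved by serving a workload closer to its users.

    compute_kwh    -- model computation energy per day
    data_plane_kwh -- embeddings, KV-cache reads, retrieval calls, cross-region hops
    data_plane_cut -- fraction of data-plane energy eliminated (0.0 to 1.0)
    compute_cut    -- fraction of computation avoided, e.g. through cache hits
    """
    before = compute_kwh + data_plane_kwh
    after = compute_kwh * (1 - compute_cut) + data_plane_kwh * (1 - data_plane_cut)
    return 1 - after / before


# Assumed split: 4,500 kWh/day each for compute and data plane.
print(f"{energy_savings_fraction(4500, 4500, data_plane_cut=0.5):.0%}")  # 25%
print(f"{energy_savings_fraction(4500, 4500, data_plane_cut=0.5, compute_cut=0.2):.0%}")  # 35%
```

With an even split, halving data movement alone saves 25%. Landing inside the 30–50% range quoted above also requires avoiding some computation, for example through the higher cache hit rates the paragraph mentions, or a data plane that outweighs compute.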

The Grid Model: Learning from Electricity Distribution

If you want to understand why inference architecture must change, look not to computing but to the power grid.

Electricity generation is centralized. Massive plants are built where energy is cheapest: hydro valleys, desert solar farms, gas fields. But electricity consumption is distributed: homes, hospitals, factories, intersections, and devices scattered across the map. The only way to bridge those two realities is a hierarchy — high-voltage transmission, regional substations, and neighborhood transformers — each placed progressively closer to demand.

That separation isn’t aesthetic. It’s physics. Move electricity too far and you incur line losses. Deliver it too slowly and voltage sags. Push too much through the wrong tier and the grid collapses. The grid works because generation scales with efficiency, but distribution scales with proximity.

Inference follows the same laws. Training can be centralized. Inference cannot.

We don’t run high-voltage transmission directly into every home and rebuild the full electrical load at each outlet. It would be wasteful, fragile, and physically unnecessary. In the same way, sending every inference request back to a central model and recomputing retrieval, reasoning, and context from scratch burns energy and blows through latency budgets. Inference needs tiers, not round-trips.

Just as electrical grids aren’t optional, inference distribution won’t be, either. In many industries, regulation mandates local infrastructure. Healthcare (HIPAA), financial services (SOC 2), manufacturing (IP protection), and government workloads (FedRAMP) often cannot route queries across borders or tolerate the latencies of distant cloud regions. These sectors together account for more than 40% of U.S. GDP, a reminder that a large share of economic value sits in places where centralizing inference simply isn’t allowed. The grid is distributed because society needs it. Inference will be distributed for exactly the same reason.

Three Principles of Grid-Based Inference

Just as electrical grids follow fundamental principles of physics and economics, inference grids depend on three core architectural principles:

Principle 1: Proximity Reduces Waste

The speed of light in fiber is roughly 124 miles per millisecond. A round trip to a site 1,000 miles away (2,000 miles of fiber) imposes an unavoidable ~16 ms delay. For sub-50 ms workloads such as fraud detection, industrial control, and real-time user interaction, geography outweighs almost every other performance factor.
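The ~16 ms figure is pure propagation arithmetic for a site 1,000 miles away, i.e. 2,000 miles of fiber out and back. A minimal sketch, assuming straight fiber paths (real routes are longer and add switching and queuing delay, so these are lower bounds):

```python
FIBER_MILES_PER_MS = 124  # approximate speed of light in optical fiber (about 2/3 of c)


def fiber_rtt_ms(one_way_miles: float) -> float:
    """Minimum round-trip propagation delay over fiber.

    Ignores routing detours, queuing, and processing, so real latency is higher.
    """
    return 2 * one_way_miles / FIBER_MILES_PER_MS


for miles in (100, 1000):
    rtt = fiber_rtt_ms(miles)
    print(f"{miles} miles away: {rtt:.1f} ms, {rtt / 50:.0%} of a 50 ms budget")
```

A site 100 miles away costs about 1.6 ms; one 1,000 miles away costs about 16.1 ms, roughly a third of a 50 ms budget before the model has done any work.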

Moving data is even more expensive. Per byte, network transfer can consume orders of magnitude more energy and money than the computation performed on it. Sending even 50 KB of context across regions can cost more than running the model itself.

The grid principle: just as electrical substations sit close to demand, inference must be deployed where users and data actually reside.

Principle 2: Hierarchy Enables Scale

Most inference doesn’t need a large-scale model. Across studies from Microsoft Azure (2024), Alibaba’s Qwen team (2024), Mistral (2023), and Databricks (2024), 60–80% of real-world queries can be handled by small-to-mid models (7B–30B) with little or no loss in quality at a fraction of the energy cost¹. Treating every request as a 70B-class problem is like powering a coffee maker from a transmission line.

The economics are stark. 7B–30B models (quantized) run on one or two mid-tier GPUs, using 5–20% of the energy and 10–30% of the cost of a 70B-class model. 70B+ models require multiple high-end GPUs, each drawing several hundred watts. This is why production architectures increasingly converge on a two-tier hierarchy:

Tier 1: Small-to-mid models (7B–30B) at edge or metro nodes handle most routine queries, retrieval steps, and domain-specific reasoning.

Tier 2: Large-scale models (70B+) in core regional sites reserved for complex reasoning, multi-step tasks, and high-stakes decisions.

The grid principle: Keep everyday intelligence local, and use large-scale capacity only when the task truly requires it.

¹ Kernreiter et al., “Energy Efficiency of Small Language Models,” TU Wien, 2025
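The two-tier hierarchy comes down to a routing decision plus a weighted average. The sketch below is illustrative only: the escalation rules, the `LONG_PROMPT_WORDS` cutoff, and the tier names are hypothetical, and the Tier 1 energy factor of 0.15 is simply a value inside the 5–20% range cited above. In a real system the escalation signal might come from a classifier rather than boolean flags.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    relative_energy: float  # per-query energy relative to a 70B-class model


# Illustrative values: 0.15 sits inside the 5-20% range cited in the text.
EDGE = Tier("tier1-edge-7B-30B", 0.15)
CORE = Tier("tier2-core-70B+", 1.0)

LONG_PROMPT_WORDS = 400  # hypothetical cutoff for "too much context for the edge model"


def route(query: str, needs_multistep: bool, high_stakes: bool) -> Tier:
    """Toy router: escalate to Tier 2 only when the task calls for it."""
    if needs_multistep or high_stakes or len(query.split()) > LONG_PROMPT_WORDS:
        return CORE
    return EDGE


def blended_energy(tier1_share: float, tier1: Tier = EDGE, tier2: Tier = CORE) -> float:
    """Average per-query energy relative to sending every query to Tier 2."""
    return tier1_share * tier1.relative_energy + (1 - tier1_share) * tier2.relative_energy


print(route("What are your store hours?", needs_multistep=False, high_stakes=False).name)
print(f"{blended_energy(0.70):.3f}")  # 0.405
```

With 70% of traffic on Tier 1 (inside the 60–80% range above), average energy per query falls to about 0.405 of an all-70B baseline, roughly a 60% reduction under these assumptions.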

Principle 3: Caching Prevents Redundant Work

A customer-service chatbot handling 10,000 sessions per day typically sees 60–70% semantic overlap in user questions. But today’s RAG (Retrieval-Augmented Generation) pipelines treat every session as unique, repeatedly fetching the same documents, reranking the same candidates, and reconstructing nearly identical context windows. Meta’s Retrieval-Augmented Generation at Scale (2023) study showed that 60–80% of retrieval sets in enterprise queries overlap, meaning most of this work is duplicated thousands of times.

Across a 10-node inference tier, this redundancy can easily consume 600–700 kWh/day purely on retrieval, embedding, and context assembly. Introduce even a modest 70% semantic-cache hit rate, consistent with overlap observed by Meta, Google’s enterprise QA evaluations, and Zendesk support flows, and the load drops to roughly 200 kWh/day. That’s a 60–70% energy reduction without touching the model, GPUs, or data-center design.
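Taking the midpoint of the 600–700 kWh/day range, the effect of a cache hit rate is simple arithmetic. This sketch also lets a hit cost something (the similarity lookup itself); the 5% hit cost is an assumption, not a measured value.

```python
def daily_pipeline_energy(baseline_kwh: float, hit_rate: float,
                          hit_cost_fraction: float = 0.0) -> float:
    """Daily retrieval/embedding/context-assembly energy with a semantic cache.

    baseline_kwh      -- energy per day with no cache (every session recomputes)
    hit_rate          -- fraction of sessions served from the cache
    hit_cost_fraction -- energy of a cache hit relative to a full recompute
                         (assumed, e.g. the similarity lookup)
    """
    misses = (1 - hit_rate) * baseline_kwh
    hits = hit_rate * baseline_kwh * hit_cost_fraction
    return misses + hits


print(f"{daily_pipeline_energy(650, 0.70):.0f} kWh/day")  # 195 kWh/day
print(f"{daily_pipeline_energy(650, 0.70, hit_cost_fraction=0.05):.0f} kWh/day")  # 218 kWh/day
```

Free hits give about 195 kWh/day, in line with "roughly 200"; charging 5% per hit gives about 218 kWh/day, a reduction of roughly 66%, still inside the 60–70% range.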

The grid principle: Inference nodes should reuse semantic work — retrieval, reranking, embeddings, and context assembly — instead of recomputing identical steps for every user session.
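A semantic cache differs from an exact-match cache in that it reuses work when a new query is merely *similar* to an earlier one. The sketch below is a toy: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, the 0.8 similarity threshold is an assumption, `build_context` is a placeholder for a RAG pipeline's retrieval and reranking, and eviction and invalidation are left out.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Reuse assembled context when a new query is close enough to a cached one."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # assumed cutoff for treating queries as equivalent
        self.entries: list[tuple[Counter, str]] = []
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, query: str, compute) -> str:
        vec = toy_embed(query)
        # Linear scan for clarity; a real deployment would use a vector index.
        scored = [(cosine(vec, e_vec), result) for e_vec, result in self.entries]
        best_score, best_result = max(scored, default=(0.0, None), key=lambda s: s[0])
        if best_result is not None and best_score >= self.threshold:
            self.hits += 1
            return best_result
        self.misses += 1
        result = compute(query)  # expensive path: retrieval, reranking, context assembly
        self.entries.append((vec, result))
        return result


def build_context(query: str) -> str:
    # Placeholder for retrieval + reranking + context assembly.
    return f"context for: {query}"


cache = SemanticCache(threshold=0.8)
for q in ["How do I reset my password?",
          "how do I reset my password",
          "What is your refund policy?"]:
    cache.get_or_compute(q, build_context)
print(cache.hits, cache.misses)  # 1 2
```

The second query differs only in casing and punctuation, so it reuses the first query's context; the third shares no terms and triggers a fresh computation.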

Why Training Data Centers Cannot Be Repurposed

The question seems logical: if an organization already owns a training data center, why not simply run inference workloads on it? The answer reveals why inference requires fundamentally different infrastructure.

The Physical Mismatch

Training data centers are engineered for 80–120kW rack density with direct-to-chip liquid cooling. Inference workloads on quantized models require 15–30kW racks with hybrid air/liquid cooling.

Retrofitting training infrastructure for inference densities means:

Removing liquid cooling infrastructure (sunk capital cost)

Replacing with lower-density cooling (new capital expenditure)

Underutilizing electrical capacity (designed for synchronized 100% TDP across all GPUs)
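The stranded-capacity point can be quantified with the density ranges above. This sketch assumes each rack position keeps its original power and cooling allocation when inference racks replace training racks one-for-one; that one-for-one layout is an assumption for illustration.

```python
def stranded_fraction(design_kw_per_rack: float, actual_kw_per_rack: float) -> float:
    """Share of each rack position's provisioned power (and cooling) left unused."""
    if actual_kw_per_rack > design_kw_per_rack:
        raise ValueError("actual draw exceeds design capacity")
    return 1 - actual_kw_per_rack / design_kw_per_rack


# Midpoints of the ranges above: 100 kW designed vs 22.5 kW drawn.
print(f"{stranded_fraction(100, 22.5):.1%}")  # 77.5%
# Range endpoints: best case 30 kW in an 80 kW slot, worst case 15 kW in a 120 kW slot.
print(f"{stranded_fraction(80, 30):.1%} to {stranded_fraction(120, 15):.1%}")  # 62.5% to 87.5%
```

Under that assumption, between roughly 60% and almost 90% of the electrical capacity paid for at each rack position sits idle.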

The net result costs roughly as much as greenfield construction, yet delivers infrastructure optimized for the wrong workload. Meta paused planned data center projects in 2024 specifically to rescope them for AI inference rather than attempt retrofits.² The economics don’t work.

² “AI Datacenter Energy Dilemma,” SemiAnalysis, March 2024

The Geographic Constraint

Training clusters locate where power is abundant and cheap, such as rural Oregon, West Texas, Iceland. These locations optimize for cost per kWh and megawatt availability. Inference must locate near users and within regulatory boundaries.

For example, healthcare inference must stay inside HIPAA-controlled environments, either on-premise or in HIPAA-compliant cloud regions, because model outputs and intermediate states derived from patient records can count as PHI (Protected Health Information). Financial services workloads often must stay in-country for regulatory compliance. Manufacturing inference must run on-site for IP protection and control-loop latency.

A training cluster in rural Oregon cannot readily serve EU customers (GDPR restricts transfers of personal data outside the EU without adequate safeguards), healthcare providers outside HIPAA-compliant regional boundaries, or manufacturing plants whose real-time control requirements cannot tolerate 100ms+ latency.

What an Inference-Ready Infrastructure Actually Looks Like

If training-era data centers can’t simply be repurposed for inference, what does work? The shape of the answer emerges directly from the three grid principles: proximity, hierarchy, and reuse. Here’s the profile that wins in the inference era, and why it looks almost nothing like the hyperscale campuses we spent the last decade building.

Geography: Close Enough to Matter, Cheap Enough to Build

Inference lives on a clock the cloud cannot escape. The winning sites sit within 50–100 miles of major metros, close enough to stay under ~20ms latency budgets. They also need economical access to real estate, substation capacity, and permitting timelines that won’t collapse the business plan. This geography satisfies two constraints at once: latency (get the compute near people and data) and sovereignty (keep inference inside the required region).
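The two constraints, plus power availability, can be expressed as a simple site filter. Everything here is a hypothetical sketch: the site names, region codes, and capacities are invented for illustration, the 1.5x fiber route factor is an assumed detour over straight-line distance, and `network_share` (the slice of the latency budget allowed for propagation) is an assumed parameter. The 2 MW floor mirrors the low end of the 2–20MW site range discussed below.

```python
from dataclasses import dataclass

FIBER_MILES_PER_MS = 124  # approximate speed of light in optical fiber
ROUTE_FACTOR = 1.5        # assumption: fiber routes run ~1.5x the straight-line distance


@dataclass(frozen=True)
class Site:
    name: str
    miles_to_metro: float           # straight-line distance to the demand center
    region: str                     # compliance/sovereignty zone the site sits in
    substation_mw_available: float  # grid capacity that can be secured


def propagation_rtt_ms(miles: float) -> float:
    """Round-trip fiber propagation delay, before any queuing or compute time."""
    return 2 * miles * ROUTE_FACTOR / FIBER_MILES_PER_MS


def viable_sites(sites, required_region, latency_budget_ms,
                 network_share=0.25, min_mw=2.0):
    """Filter candidate sites by sovereignty, latency, and power.

    network_share is the fraction of the end-to-end latency budget allowed
    for propagation, leaving the rest for queuing and inference.
    """
    return [
        s for s in sites
        if s.region == required_region
        and propagation_rtt_ms(s.miles_to_metro) <= network_share * latency_budget_ms
        and s.substation_mw_available >= min_mw
    ]


# Hypothetical candidates; names, regions, and capacities are illustrative only.
SITES = [
    Site("site-a", 60, "us-ca", 12),
    Site("site-b", 400, "us-ca", 50),
    Site("site-c", 40, "eu-de", 8),
    Site("site-d", 30, "us-ca", 1),
]

print([s.name for s in viable_sites(SITES, "us-ca", latency_budget_ms=20)])  # ['site-a']
```

With a 20 ms budget, only site-a survives: site-b is too far to fit its propagation delay into 5 ms, site-c sits in the wrong compliance zone, and site-d lacks substation capacity.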

Scale: Not Mega-Campuses, but Metro-Scale Nodes

Training rewarded 100MW+ multi-billion-dollar campuses where GPUs converge like iron filings around a magnet. Inference punishes that model. The sites that work best are 2–20MW: large enough to operate economically, small enough to fit near metros where demand actually lives. That size allows a fast build-out, within 12 months, inside a single compliance boundary. A 10MW site can often serve the inference traffic of a metropolitan region.

Deployment: A Network, Not a Planet

A training campus costs $800M to $2.5B and takes 24 to 36 months to build. A metro-adjacent 2 to 20 MW facility typically costs $20M to $120M and deploys in 6 to 12 months. This opens the market to dozens of operators.

Training thrives in one place. Inference thrives in many. The future is a tiered grid of sites, each doing what it does best:

Edge nodes for small models and cached semantic memory

Regional hubs for heavier models and multi-step reasoning

Specialized sovereign facilities for healthcare, finance, defense

Ownership: The Market Opens Where Hyperscalers Can’t Go

Training consolidated power in the hands of a few because the workload rewarded scale. Inference breaks that logic, not because of economics alone, but because of jurisdiction. Inference runs in hospitals, banks, factories, public agencies, and sovereign borders. Unlike training, the decision itself is the regulated object, not just the data. This redraws the ownership map. A new class of operators will emerge precisely where hyperscalers are structurally constrained. We will see:

Regional AI infrastructures aligned to local compliance zones

Vertically specialized facilities (healthcare-only, finance-only, defense-only)

Sovereign and quasi-sovereign operators in emerging digital economies

Enterprise-controlled nodes on-premise or in metro-adjacent edge sites

Distributed franchise or partnership models that hyperscale economics can’t justify

This isn’t fragmentation. It’s localization as a feature.

Capital Profile: A Different Asset Class Entirely

A training campus costs $800M–$2.5B, takes 24–36 months to build, and requires a hyperscaler-level balance sheet to finance the GPUs, substations, and transmission upgrades. A metro-adjacent 2–20MW facility typically costs $20M–$120M, deploys in 6–12 months, and scales through gradual growth: open one site, fill it, replicate it in the next metropolitan ring. It is lower risk, payback periods will be shorter, and developers can build where usage actually appears. This opens the market to dozens of operators, including regional players, vertical specialists, and sovereign providers.

Sources: Structure Research 2024 Edge DC Model, C&W Cost Index 2024, JLL 2024 Metro Edge Builds.

The Democratization Opportunity

The 2–20MW scale, metro-adjacent positioning, and distributed deployment model create opportunities for:

Regional infrastructure providers who already own real estate near population centers and can deploy inference nodes in existing facilities or adjacent greenfield sites.

Specialized operators serving regulated industries (healthcare, finance, government) that require dedicated, compliant infrastructure rather than shared hyperscaler resources.

Franchise and partnership models where domain expertise (healthcare IT, financial services technology) combines with infrastructure operation, impossible at hyperscale economics.

The grid structure democratizes both deployment and access. Small hospitals can access on-premise inference. Regional banks can serve customers with locally compliant models. Manufacturing plants can deploy air-gapped quality control.

The Path Forward

Organizations don’t need to abandon training infrastructure. But they do need to recognize that inference requires different infrastructure with different economics. Both training clusters and inference grids are necessary. Training develops the models. Inference delivers them to users. The mistake is assuming the same infrastructure serves both purposes.

The market opportunity is in infrastructure positioned for the inference profile: distributed, proximate, compliance-ready, and capital-efficient.

The three principles of grid-based inference:

Proximity reduces waste. Data movement costs more energy than computation. Deploy inference where users and data concentrate.

Hierarchy enables scale. Tier compute by demand — small models at edge, large models at regional hubs.

Caching prevents redundant work. 60–80% of queries repeat semantic patterns. Cache computation pipelines across sessions.

This isn’t about retrofitting old infrastructure. It’s about building the right infrastructure for a new era. The companies and investors who recognize this distinction early will define the next decade of AI infrastructure.

This essay is Part 3 of the Pascaline AI Infrastructure Research Series. Part 1 introduced the AI Index. Part 2 highlighted inference challenges.


Bay Area Startups Collectively Secured $22B+ in January Week 1

January started out where December left off, with SV startups collecting more than $22B in funding in the first week. In a week with only 21 fundings, almost half were megadeals. In dollars, the megadeals made up 98% of the week's total. xAI was at the top of the list with a $20B Series E, followed by eight more megadeals, half of them in biotech.

Andreessen Horowitz's $18B raise in nine funds today comes after all US VC firms combined raised just $66B in 2025, the lowest since 2019. It was clear at the end of the year that the VCs who successfully raised new funds were the older, established firms; Andreessen's announcement today just reinforces that. The prolonged IPO drought and lack of liquidity have made fundraising by emerging managers (a challenging job in any environment) much, much tougher.

Follow us on LinkedIn to stay on top of SV funding intelligence and key players in the startup ecosystem.

Early Stage:

AirNexis Therapeutics closed a $200M Series A, a clinical-stage biotech company developing novel therapeutic drugs for the treatment of COPD patients.

LMArena closed a $150M Series A, an open platform where everyone can access and interact with the world's leading AI models and contribute to AI progress through real-world feedback.

Corgi Insurance closed a $108M Series A, a full-stack insurance carrier building better, faster insurance products for startups.

Array Labs closed a $20M Series A, building the next generation of space-based radar systems.

Topos Bio closed a $10.5M Seed, focused on AI-powered drug discovery targeting intrinsically disordered proteins.

Growth Stage:

xAI closed a $20B Series E, building artificial intelligence to accelerate human scientific discovery.

Soley Therapeutics closed a $200M Series C, a tech-enabled drug discovery and development company using cells as the world’s most powerful sensors to uncover first-in-class medicines.

EpiBiologics closed a $107M Series B, advancing a next-generation protein degradation pipeline and platform that targets extracellular membrane and soluble proteins.

Apella Technology closed an $80M Series B, an OR management platform providing hospitals with real-time operational intelligence to eliminate delays, distractions, and inefficiencies.

Canopy Works closed a $22M Series B, a company delivering comprehensive safety coverage through a seamlessly integrated system provided as a complete service.

CredPR is a strategic communications and growth firm that helps startups and technology companies scale their brand presence, attract investors, and secure meaningful media coverage. The firm combines public relations, content strategy, and community engagement to elevate innovators in competitive markets.

What CredPR Delivers

• Strategic media positioning tailored for technology brands

• Narrative development and content amplification

• Investor communications and visibility campaigns

• Integrated public relations support across channels

Why It Matters

In today’s crowded startup landscape, strong visibility and messaging are essential for growth. CredPR helps companies refine their voice, articulate their value, and reach target audiences with clarity and impact.

Who It Serves

Early-stage and growth-stage technology companies, founders seeking media traction, and teams looking to strengthen brand recognition and investor awareness.

CredPR builds momentum for companies that want to be seen and remembered. For more information, please reach out to: [email protected]


Your Feedback Matters!

Your feedback is crucial in helping us refine our content and maintain the newsletter's value for you and your fellow readers. We welcome your suggestions on how we can improve our offering. [email protected]

Logan Lemery

Head of Content // Team Ignite

3 Tricks Billionaires Use to Help Protect Wealth Through Shaky Markets

“If I hear bad news about the stock market one more time, I’m gonna be sick.”

We get it. Investors are rattled, costs keep rising, and the world keeps getting weirder.

So, who’s better at handling their money than the uber-rich?

Here are 3 long-term investing tips UBS (Swiss bank) shared for shaky times:

Hold extra cash for expenses and buying cheap if markets fall.

Diversify outside stocks (Gold, real estate, etc.).

Hold a slice of wealth in alternatives that tend not to move with equities.

The catch? Most alternatives aren’t open to everyday investors.

That’s why Masterworks exists: 70,000+ members invest in shares of something that’s appreciated more overall than the S&P 500 over 30 years without moving in lockstep with it.*

Contemporary and postwar art by legends like Banksy, Basquiat, and more.

Sounds crazy, but it’s real. One way to help reclaim control this week:

*Past performance is not indicative of future returns. Investing involves risk. Reg A disclosures: masterworks.com/cd
